Difference between revisions of "Usage of a HPC cluster"


Revision as of 09:17, 30 April 2021


Here we collect some general (and by no means complete) information about the usage and policies of an HPC cluster.

Structure of an HPC cluster

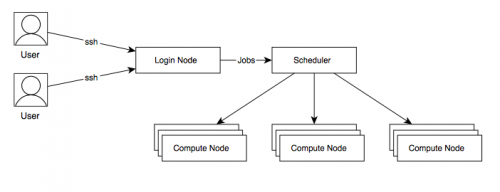

The structure of an HPC system is sketched in the picture above. These are the main logical building blocks:

- a login node is exposed to users for access (typically via ssh),

- a dedicated scheduler (the queuing system) dispatches computational jobs to the compute nodes,

- computation therefore happens asynchronously (in batch mode), and not on the login node,

- a specific software environment is provided on the login node and on the compute nodes to run parallel jobs,

- the same cluster structure is found from commodity local machines up to large-scale HPC architectures (such as the Summit machine at Oak Ridge National Laboratory (ORNL), TN, USA).
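To make the batch-mode idea concrete, here is a minimal sketch of a job script. It assumes the scheduler is Slurm, which this page does not state; other queuing systems use different directives and submission commands. The job name and output file name are hypothetical placeholders.

```shell
#!/bin/bash
# Minimal batch-job sketch. ASSUMPTION: the scheduler is Slurm (not stated
# on this page). When run with plain bash, the #SBATCH lines are comments.

# Hypothetical job name
#SBATCH --job-name=hello
# Request one compute node and a single task
#SBATCH --nodes=1
#SBATCH --ntasks=1
# Wall-time limit (hh:mm:ss)
#SBATCH --time=00:05:00
# Output file; %j is replaced by the job ID
#SBATCH --output=hello.%j.out

# Under the scheduler, the commands below run on the allocated compute node,
# not on the login node.
node_name="$(uname -n)"
echo "Job running on node: ${node_name}"
```

Under the Slurm assumption, a script saved as hello.sh would be submitted from the login node with sbatch hello.sh, and its state in the queue could be checked with squeue -u $USER. The output would appear in the file named by the --output directive once the job has run.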

Connecting

Unless other means are provided, you typically connect using the ssh protocol.

From a shell terminal or a suitable app:

ssh -Y <user>@<machine_host_name>

or

ssh -Y -l <user> <machine_host_name>

<user>: Unix username on the cluster login node