Usage of a HPC cluster

Latest revision as of 09:37, 30 April 2021

Here we collect some general (and by no means complete) information about the usage and policies of an HPC cluster.

Structure of an HPC cluster

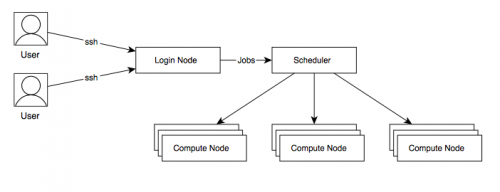

The structure of an HPC system is sketched in the picture above. These are the main logical building blocks:

- a login node is exposed to users for access (typically via ssh);

- a dedicated scheduler (the queuing system) dispatches computational jobs to the compute nodes;

- computation therefore happens asynchronously (in batch mode), and not on the login node;

- a specific software environment is provided on the login node and on the compute nodes to run parallel jobs;

- the same cluster structure is used from commodity local machines to large-scale HPC architectures, such as the Summit machine at Oak Ridge National Laboratory (ORNL), TN, USA.

Connecting

Unless other means are provided, you typically connect using the ssh protocol.

From a shell terminal or a suitable app:

ssh -Y <user>@<machine_host_name>

or

ssh -Y -l <user> <machine_host_name>

<user>: Unix username on the cluster login node

<machine_host_name>: hostname (or IP) of the target HPC machine

To log in, you need a working Unix username and password on the target machine.

Scheduler

Several schedulers exist; examples include PBS Torque, IBM LoadLeveler and SLURM. Here we cover just some examples, listing the main commands to be used from the shell.

PBS Torque

- submitting a job

qsub file.sh

- monitoring a job

qstat # general query, all jobs are shown

qstat -u $USER # only my jobs

qstat JID

- checking queue properties

qstat -q

- deleting a job

qdel JID

JID (Job ID) is given by qstat

- Interactive use on compute nodes

qsub -I -q <queue_name>
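
The file.sh passed to qsub above is an ordinary shell script whose leading #PBS comment lines carry the requests to the scheduler. The following is only a minimal sketch of such a script, written out with a here-document so that it can be inspected before submission. The job name, the resource request (1 node, 4 cores, 10 minutes), the program name my_program and the use of mpirun are illustrative assumptions, not values taken from any specific cluster; <queue_name> must be replaced with a queue reported by qstat -q.

```shell
# Write a minimal PBS Torque job script to file.sh (all values are hypothetical examples).
cat > file.sh <<'EOF'
#!/bin/bash
# Job name shown by qstat
#PBS -N test_job
# Target queue: replace <queue_name> with a real queue (see qstat -q)
#PBS -q <queue_name>
# Resources: 1 node with 4 cores, at most 10 minutes of wall time
#PBS -l nodes=1:ppn=4
#PBS -l walltime=00:10:00
# Merge standard error into the standard output file
#PBS -j oe

# PBS starts the job in the home directory: move to the submission directory
cd "$PBS_O_WORKDIR"

# Set up the software environment (module name as used elsewhere on this page)
module purge
module load psxe_2020

# Run a hypothetical MPI program on the 4 requested cores
mpirun -np 4 ./my_program > my_program.out
EOF

echo "wrote file.sh; submit it from the login node with: qsub file.sh"
```

Once the placeholders are adapted, the script is submitted with qsub file.sh and followed with qstat -u $USER, as listed above.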

Environment

Use the module command to manage the Unix environment.

Make sure you load a proper parallel environment (including MPI-aware compilers).

One example:

module purge

module load psxe_2020 # Intel 2020 compiler

module load <more modules you are interested in, e.g. incl some QE distribution>

These commands can also be placed in the .bashrc file, and most of the time they need to be present in submission scripts as well.

To query the module system:

module list # lists loaded modules

module avail # lists available modules
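
Since the same module commands are typically needed both in .bashrc and in submission scripts, one option is to wrap them in a small shell function. The sketch below is only an illustration: the function name load_hpc_env is hypothetical, and psxe_2020 is simply the module name used in the example on this page, which will differ from cluster to cluster. The guard lets the function fail cleanly on machines where the module command is not available (for instance a laptop).

```shell
# Hypothetical helper: set up the parallel environment if the module system exists.
load_hpc_env() {
    # The module command is normally defined only on the cluster nodes.
    if ! command -v module >/dev/null 2>&1; then
        echo "module command not found: is this a cluster node?" >&2
        return 1
    fi
    module purge              # start from a clean environment
    module load psxe_2020     # example module name; adapt to your cluster
    module list               # show what ended up loaded
}
```

The function can be defined in .bashrc and then called as load_hpc_env at the top of a job script, so that interactive sessions and batch jobs use the same environment.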